Project Overview

In an era where creative and practical applications of AI are continuously expanding, Pietra, a trailblazer in e-commerce solutions, sought to harness generative AI models to enhance its online store offerings. The initiative set out to conduct a meticulous comparative analysis of the Stable Diffusion and DALL-E models. Its cornerstone was an environment on AWS SageMaker for evaluating the two models side by side on a uniform set of user-provided prompts. The primary goal was to explore and document each model's capabilities, efficiency, and practical application potential in various creative scenarios.

The project entailed setting up a SageMaker environment, fine-tuning Stable Diffusion on relevant datasets from the e-commerce domain, generating and analyzing results from identical prompts, and comparing them with the results from DALL-E. The goal was to extract actionable insights to guide strategic model deployment in business operations.
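To make the "identical prompts" step concrete, here is a minimal sketch, assuming each model is wrapped in a Python callable that takes a prompt and a seed and returns where the image was saved. `run_comparison`, `GenerationRecord`, and the model labels are hypothetical names; real wrappers would call the fine-tuned SDXL model on SageMaker and the DALL-E API.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical wrapper signature: (prompt, seed) -> path or URL of the generated image.
# Models that expose no seed (e.g. DALL-E through the OpenAI API) can ignore it.
Generator = Callable[[str, int], str]


@dataclass
class GenerationRecord:
    model: str
    prompt: str
    seed: int
    output: str
    seconds: float  # wall-clock time of the generate call


def run_comparison(
    prompts: List[str], generators: Dict[str, Generator], seed: int = 0
) -> List[GenerationRecord]:
    """Send every prompt, unchanged, to every model and record the result."""
    records: List[GenerationRecord] = []
    for prompt in prompts:
        for name, generate in generators.items():
            start = time.perf_counter()
            output = generate(prompt, seed)
            elapsed = time.perf_counter() - start
            records.append(GenerationRecord(name, prompt, seed, output, elapsed))
    return records


if __name__ == "__main__":
    # Stub generators stand in for the real SDXL and DALL-E calls.
    stubs = {
        "sdxl-lora": lambda p, s: f"sdxl/{s}/{p}.png",
        "dall-e": lambda p, s: f"dalle/{p}.png",
    }
    for r in run_comparison(["red linen shirt"], stubs, seed=42):
        print(r.model, r.output)
```

Looping over prompts in the outer loop keeps each prompt's outputs adjacent, which makes side-by-side review easier.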

The Challenges

As generative AI technology keeps advancing, there is growing demand to use the best available models; however, these brand-new models come with challenges:

- Model Performance Uncertainty: It is often unclear which generative model performs best across a variety of prompts and use cases, which complicates model selection for a specific application.

- Need for Fine-Tuning: There is little insight into how fine-tuning techniques affect each model's performance, which makes it difficult to optimize a model for specific needs.

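- Complexity of Comparative Analysis: Establishing a systematic and fair comparison between the models is difficult, since DALL-E is accessed through a hosted API while Stable Diffusion runs on self-managed infrastructure with its own tunable settings.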

- Resource Constraints: Computational resources for extensive training, fine-tuning, and evaluation are limited, which poses a substantial barrier to thorough and continuous model improvement.

- User Satisfaction: The ultimate measure of a model’s success is whether the generative outputs satisfy user expectations and fit the intended application requirements. Ensuring this alignment is crucial but challenging, given the subjective nature of user satisfaction.

The Solution

To identify a feasible model, state-of-the-art models (DALL-E and several Stable Diffusion variants, mainly SDXL) were compared in Jupyter runbooks that let developers test different approaches:

- Jupyter Environment Setup: Configured a Jupyter environment with the libraries and dependencies needed for both Stable Diffusion and DALL-E.

- Data Preparation: Used a clothing image-captioning dataset from Hugging Face to tailor the training data to the e-commerce domain, with the aim of outperforming DALL-E's outputs.

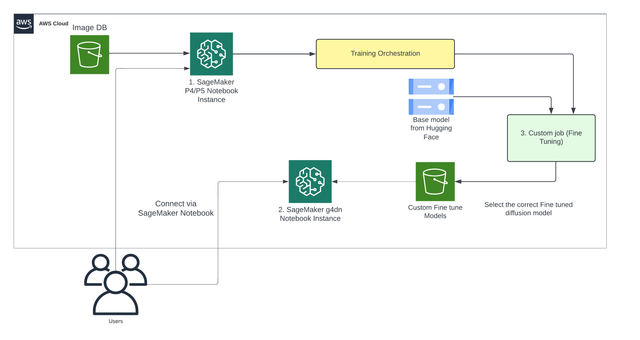
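The source does not describe the preparation steps. As a minimal sketch, assuming the images sit in one local folder and the captions arrive as (file name, caption) pairs, the function below writes the `metadata.jsonl` file that Hugging Face's `imagefolder` dataset loader reads alongside the images. The `text` column name is an assumption chosen to match the default caption column of the diffusers text-to-image training examples; check the `--caption_column` of the script actually used.

```python
import json
from pathlib import Path
from typing import Iterable, Tuple


def write_caption_metadata(image_dir: Path, pairs: Iterable[Tuple[str, str]]) -> Path:
    """Write metadata.jsonl mapping image file names to cleaned captions.

    Pairs with a blank caption or a missing image file are skipped.
    """
    image_dir = Path(image_dir)
    out_path = image_dir / "metadata.jsonl"
    with out_path.open("w", encoding="utf-8") as f:
        for file_name, caption in pairs:
            caption = " ".join(caption.split())  # collapse stray whitespace and newlines
            if not caption or not (image_dir / file_name).is_file():
                continue
            f.write(json.dumps({"file_name": file_name, "text": caption}) + "\n")
    return out_path
```

The folder can then be loaded with `datasets.load_dataset("imagefolder", data_dir=...)`.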

- Model Fine-Tuning: To simplify both the training and the comparison of the models, the following architecture was used:

Architecture diagram for training and inference. Having a defined architecture makes the comparison task easier.

Applied advanced fine-tuning techniques to the Stable Diffusion model, including:

- Textual Inversion

- LoRA (Low-Rank Adaptation) fine-tuning, a GPU-efficient technique

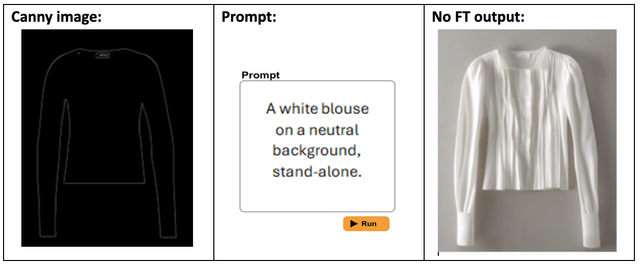

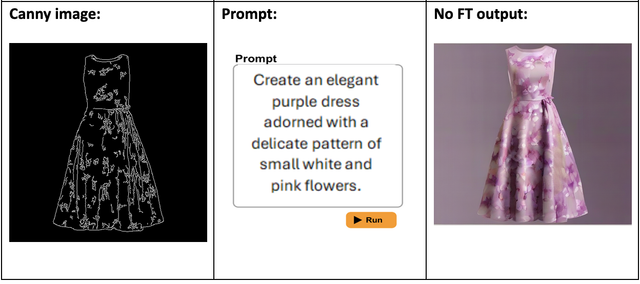

- ControlNet for image-to-image tasks
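LoRA freezes a pretrained weight matrix W (d x k) and trains two small matrices, B (d x r) and A (r x k), whose scaled product is added to it: W' = W + (alpha / r) * B A. B starts at zero, so the adapted model initially matches the original. The plain-Python sketch below, with toy sizes and illustrative function names, shows the merge and why the method is GPU-efficient: trainable parameters per layer drop from d*k to r*(d + k). In practice the adapter would be trained with a library such as Hugging Face PEFT rather than by hand.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Naive matrix product: (n x m) @ (m x p) -> (n x p)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_merge(w: Matrix, a: Matrix, b: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, where W is d x k, B is d x r, A is r x k."""
    r = len(a)  # LoRA rank = number of rows in A
    scale = alpha / r
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """(full fine-tuning, LoRA) trainable parameter counts for one d x k layer."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    full, lora = trainable_params(1024, 1024, 8)
    print(full, lora)  # 1048576 16384
```

For a 1024 x 1024 layer at rank 8, LoRA trains about 1.6% of the parameters that full fine-tuning would.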

- Output Generation: Generated images and outputs for each prompt using both models.

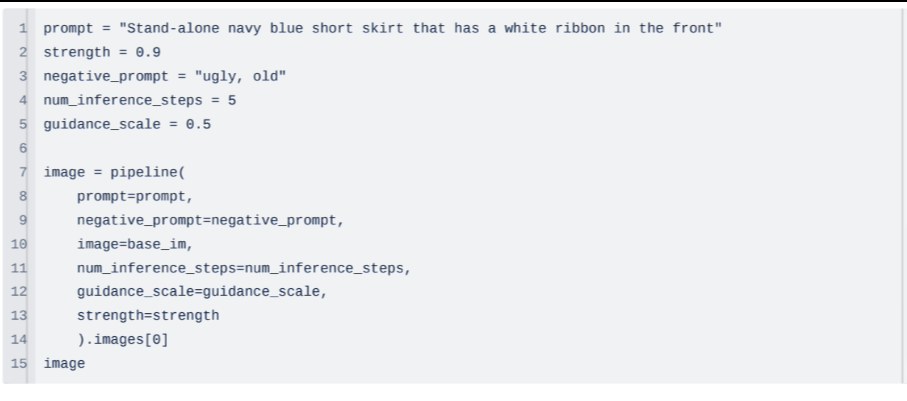

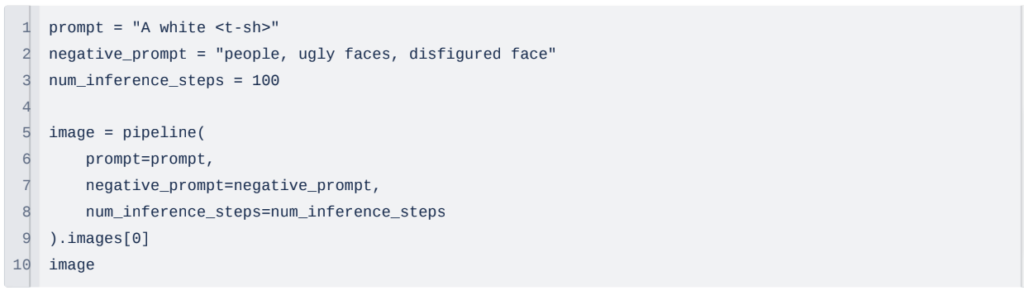

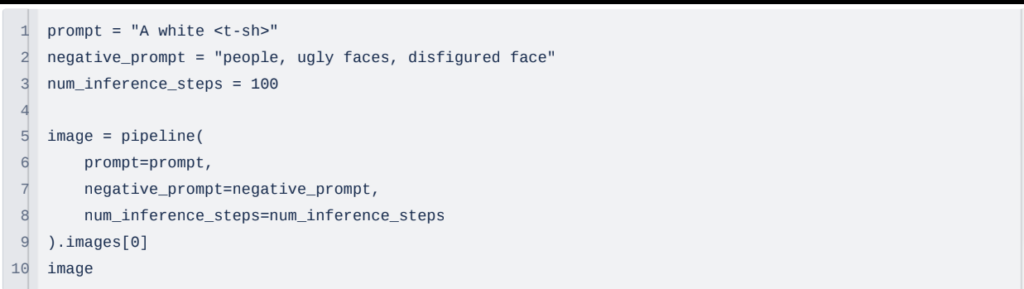

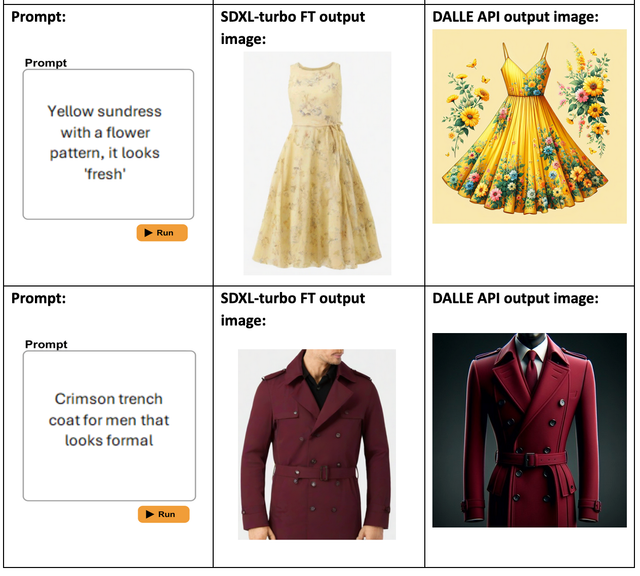

LoRA model:

- Text-to-image generation

Output:

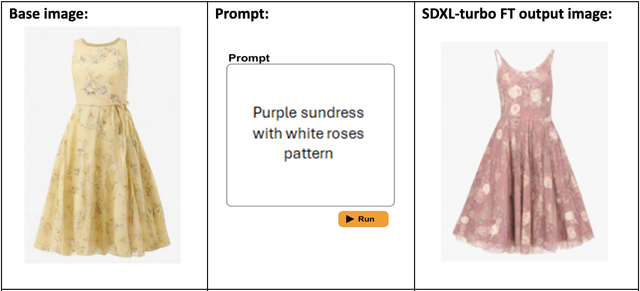

Textual Inversion

Output:

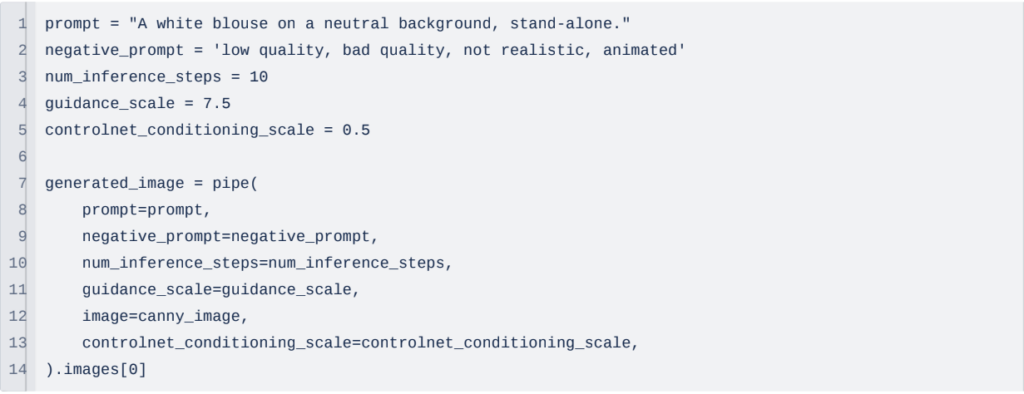
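Textual inversion keeps the text encoder and the diffusion model frozen and learns only a new embedding vector for a placeholder token, typically initialized from an existing, related token. The toy sketch below shows that bookkeeping in plain Python. The placeholder `<my-garment>` and initializer `shirt` are hypothetical, and the squared-distance objective is an assumed stand-in for the real denoising loss, which would require the full diffusion model.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def add_placeholder_token(
    vocab: Dict[str, int], embeddings: List[Vector], placeholder: str, initializer: str
) -> Tuple[Dict[str, int], List[Vector]]:
    """Append a new token whose embedding starts as a copy of `initializer`'s."""
    if placeholder in vocab:
        raise ValueError(f"{placeholder!r} already exists")
    new_vocab = dict(vocab)
    new_embeddings = [row[:] for row in embeddings]
    new_vocab[placeholder] = len(new_embeddings)
    new_embeddings.append(new_embeddings[vocab[initializer]][:])
    return new_vocab, new_embeddings


def train_placeholder(
    embeddings: List[Vector], idx: int, target: Vector, lr: float = 0.1, steps: int = 100
) -> List[Vector]:
    """Gradient descent on row `idx` only; every other row stays frozen.

    Toy loss: ||e - target||^2 (stand-in for the diffusion denoising loss).
    """
    emb = [row[:] for row in embeddings]
    for _ in range(steps):
        grad = [2.0 * (e - t) for e, t in zip(emb[idx], target)]
        emb[idx] = [e - lr * g for e, g in zip(emb[idx], grad)]
    return emb


if __name__ == "__main__":
    vocab, emb = add_placeholder_token({"shirt": 0}, [[1.0, 0.0]], "<my-garment>", "shirt")
    trained = train_placeholder(emb, vocab["<my-garment>"], [0.5, 0.5])
    print(trained)
```

Only the new row changes, which is why a textual inversion result is a small embedding file rather than a full model checkpoint.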

ControlNet
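ControlNet conditions a Stable Diffusion model on an extra control image, such as an edge map, pose skeleton, or depth map, so the generated image follows the structure of the input. The source does not say which control type was used; as an assumption, the sketch below builds an edge-style control image with a simple gradient threshold in plain Python. It is a simplified stand-in for the Canny detector (for example OpenCV's `cv2.Canny`) that many ControlNet examples use, and it skips Canny's smoothing, non-maximum suppression, and hysteresis.

```python
from typing import List

Image = List[List[int]]  # grayscale, values 0-255


def edge_control_image(gray: Image, threshold: float) -> Image:
    """Mark pixels whose central-difference gradient magnitude >= threshold.

    Border pixels reuse their nearest neighbour inside the image.
    """
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = gray[y][min(x + 1, w - 1)] - gray[y][max(x - 1, 0)]
            gy = gray[min(y + 1, h - 1)][x] - gray[max(y - 1, 0)][x]
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                out[y][x] = 255  # white edge pixel on a black background
    return out
```

White-on-black edges match the convention of the Canny-conditioned ControlNet examples, where the model is asked to respect the outlines of, say, a garment.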

Output:

- Result Analysis: Conducted a detailed analysis of the outputs, focusing on quality, coherence, creativity, and computational efficiency.

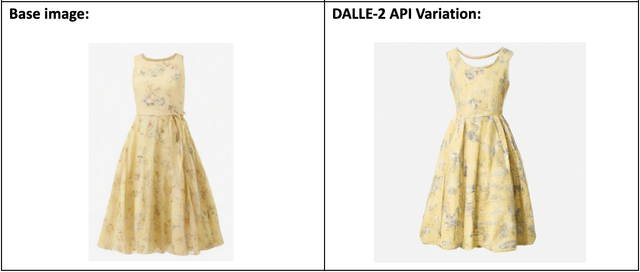
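One way to keep this analysis repeatable is to collect reviewer ratings per model, prompt, and criterion and aggregate them, with latency as a simple efficiency measure. The sketch assumes a hypothetical 1 to 5 rating scale and hypothetical model labels; the source does not state how outputs were scored.

```python
from collections import defaultdict
from statistics import mean, median
from typing import Dict, List, Tuple

CRITERIA = ("quality", "coherence", "creativity")

# (model, prompt_id, criterion, score) on an assumed 1-5 scale
Rating = Tuple[str, str, str, int]


def summarize_ratings(ratings: List[Rating]) -> Dict[str, Dict[str, float]]:
    """Mean score per model and criterion."""
    buckets: Dict[str, Dict[str, List[int]]] = defaultdict(lambda: defaultdict(list))
    for model, _prompt_id, criterion, score in ratings:
        if criterion not in CRITERIA:
            raise ValueError(f"unknown criterion: {criterion!r}")
        if not 1 <= score <= 5:
            raise ValueError(f"score out of range: {score}")
        buckets[model][criterion].append(score)
    return {
        model: {crit: mean(scores) for crit, scores in per_crit.items()}
        for model, per_crit in buckets.items()
    }


def median_latency(seconds_by_model: Dict[str, List[float]]) -> Dict[str, float]:
    """Median generation time per model; less sensitive to cold starts than the mean."""
    return {model: median(times) for model, times in seconds_by_model.items()}
```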

Generation with LoRA vs DALL-E:

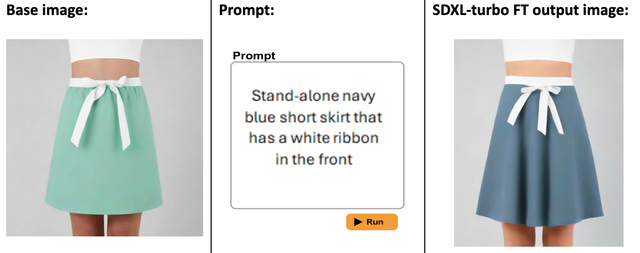

Image-to-image:

SDXL-Turbo fine-tuned with LoRA

ControlNet

DALL-E: it does not allow text-guided variations of an input image, which makes it very restrictive for this task.
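In diffusers image-to-image pipelines, `strength` controls how much of the input image is re-noised, and, as we read the pipeline code, only about `int(num_inference_steps * strength)` denoising steps are actually run. The SDXL-Turbo model card accordingly advises keeping `num_inference_steps * strength` at 1 or above. The helper below mirrors that arithmetic for a first-order scheduler; it is a sketch for sanity-checking settings, not part of the diffusers API.

```python
def img2img_denoising_steps(num_inference_steps: int, strength: float) -> int:
    """Denoising steps actually run by an img2img pipeline (first-order scheduler).

    Mirrors the init_timestep / t_start arithmetic of diffusers' img2img
    pipelines. A result of 0 means no denoising would happen.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    init_timestep = min(int(num_inference_steps * strength), num_inference_steps)
    t_start = max(num_inference_steps - init_timestep, 0)
    return num_inference_steps - t_start
```

For SDXL-Turbo, `num_inference_steps=2` with `strength=0.5` gives one step, whereas `num_inference_steps=1` with the same strength gives zero.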

- Virtual try-on with Stability AI models:

Virtual try-on for men.

Virtual try-on for women.
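The source does not say how try-on was implemented. One common framing, assumed here, is inpainting: a mask marks the garment region, the model regenerates only that region, and the result is blended back so the person and background stay untouched. The sketch shows only that final per-pixel blend on grayscale grids; `composite_try_on` is a hypothetical helper name.

```python
from typing import List

Gray = List[List[float]]


def composite_try_on(original: Gray, generated: Gray, mask: Gray) -> Gray:
    """Per-pixel blend: mask * generated + (1 - mask) * original.

    mask is 1.0 inside the garment region and 0.0 outside; fractional
    values feather the seam between the two.
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images must have the same height")
    out: Gray = []
    for o_row, g_row, m_row in zip(original, generated, mask):
        if not (len(o_row) == len(g_row) == len(m_row)):
            raise ValueError("images must have the same width")
        if any(not 0.0 <= m <= 1.0 for m in m_row):
            raise ValueError("mask values must be in [0, 1]")
        out.append([m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)])
    return out
```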

- Visualization and Reporting: Used Jupyter Notebooks to visualize results and compile a comprehensive report highlighting findings and comparisons.
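As a minimal sketch of the reporting step, assuming generated images are tracked as `outputs[model][prompt] -> path`, the function below renders a Markdown table with one row per prompt and one column per model. The function name and data shape are hypothetical.

```python
from typing import Dict, List


def comparison_table(prompts: List[str], outputs: Dict[str, Dict[str, str]]) -> str:
    """Markdown table: one row per prompt, one column per model.

    outputs[model][prompt] is the image path; missing entries show as "n/a".
    """
    models = sorted(outputs)
    lines = [
        "| Prompt | " + " | ".join(models) + " |",
        "|" + " --- |" * (len(models) + 1),
    ]
    for prompt in prompts:
        cells = []
        for model in models:
            path = outputs[model].get(prompt)
            cells.append(f"![{model}]({path})" if path else "n/a")
        escaped = prompt.replace("|", "\\|")  # keep "|" in prompts from splitting cells
        lines.append(f"| {escaped} | " + " | ".join(cells) + " |")
    return "\n".join(lines)
```

In a notebook, the returned string can be rendered with `IPython.display.Markdown`.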

Information Architecture

The runbooks also need a structured design, which keeps the work under control and makes setup and replication quick and efficient:

- Notebook Structure: Divided notebooks into sections for setup, data preparation, model training, output generation, and analysis.

- Data Management: Organized prompt data and generated outputs in structured directories for easy access and reference.

- Documentation: Included detailed documentation within the notebooks to explain processes, methodologies, and findings.

- Result Storage: Stored generated outputs and intermediate results in cloud storage for scalability and easy sharing.
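A minimal sketch of such a layout, assuming outputs are organized as `<root>/<model>/<prompt_id>/seed_<seed>.png` and mirrored to object storage under the same relative key. Both the layout and the `runs` prefix are hypothetical choices, not the project's documented structure.

```python
from pathlib import Path, PurePosixPath


def output_path(root: Path, model: str, prompt_id: str, seed: int) -> Path:
    """Hypothetical layout: <root>/<model>/<prompt_id>/seed_<seed>.png"""
    return Path(root) / model / prompt_id / f"seed_{seed}.png"


def storage_key(root: Path, local_path: Path, prefix: str = "runs") -> str:
    """Mirror the local layout as a forward-slash object key under `prefix`."""
    relative = Path(local_path).relative_to(root)
    return str(PurePosixPath(prefix, *relative.parts))
```

Each file could then be uploaded with the boto3 S3 client's `upload_file(Filename, Bucket, Key)`, using `storage_key` for `Key`; the bucket name would be project-specific.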

Value of the Project

Being at the forefront is what allows a company to stand out in its domain, and venturing into a barely explored field is innovative in itself. By identifying which models and techniques have the most impact on the use case, the project provided significant value to the client:

- Performance Insights: Offered clear insights into the strengths and weaknesses of both Stability Diffusion and DALL-E models.

- Resource Efficiency: Demonstrated efficient use of computational resources through optimized training and evaluation processes.

- Enhanced Understanding: Improved the client’s understanding of generative AI capabilities and limitations.

- Strategic Decision-Making: Enabled informed decision-making for future AI projects and model selection.

- User Satisfaction: Ensured that the generated outputs aligned with user expectations and application needs.

- Ease of Replication: Provided detailed, easy-to-use instructions that allow the client's technical team to replicate the best results without going through a discovery phase.

Conclusion

This project, executed on AWS SageMaker, demonstrated the power of fine-tuning Stable Diffusion: the fine-tuned model notably outperformed DALL-E. Using SageMaker notebooks, we conducted rigorous evaluations that highlighted not only the fine-tuned model's enhanced performance but also its superior creative capabilities. This comparative study gave Pietra deep insights into the potential of customized AI solutions, enabling strategic, informed decisions for its ongoing and future AI initiatives. Overall, the project showed the transformative power of tailored AI technologies, using the capabilities of AWS SageMaker to meet and exceed the client's strategic objectives.