Businesses increasingly rely on high-quality visuals to engage audiences, strengthen branding, and streamline workflows. From marketing campaigns to product design, the ability to produce those visuals quickly is a competitive advantage. Stability AI's models, several of which are available through Amazon Bedrock, have changed what is practical here. With models such as Stable Diffusion 3.5, companies can generate detailed, accurate images faster and apply them across a wide range of creative needs.

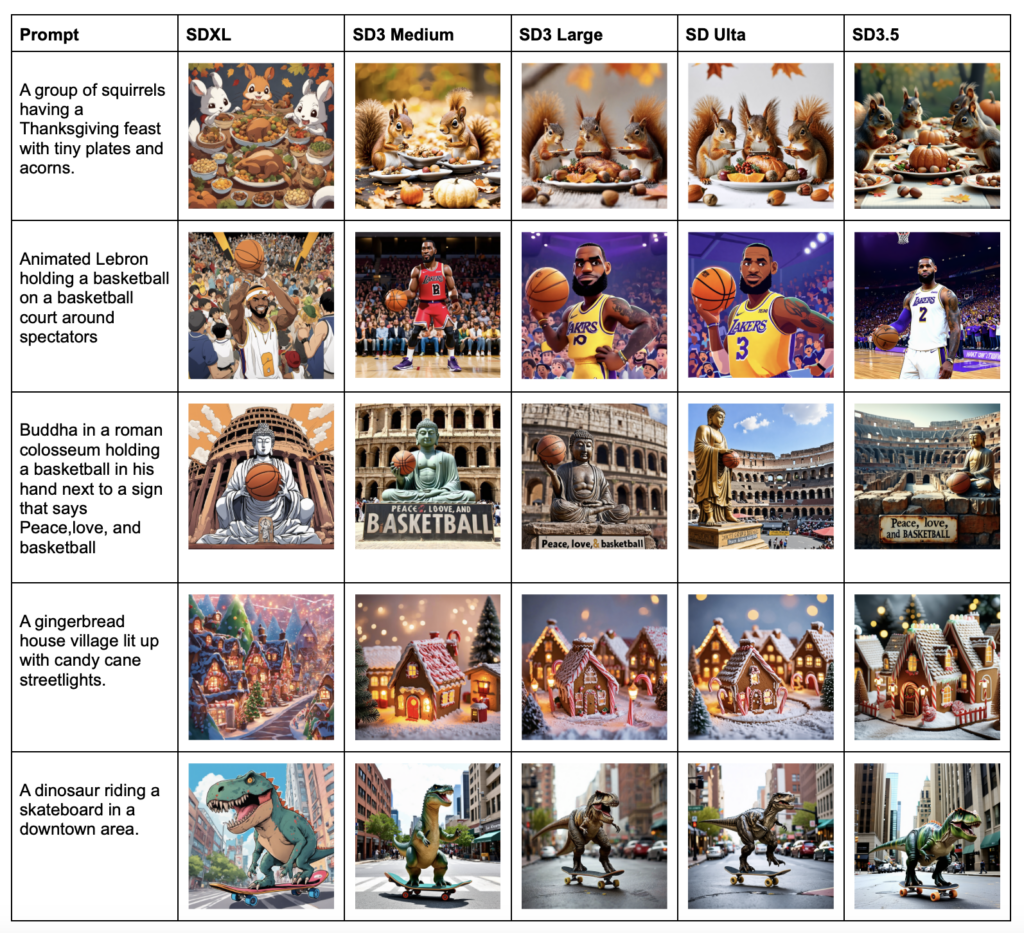

What Are SDXL, SD3 Medium, SD3 Large, Stable Image Ultra and SD3.5 Large?

These diffusion models represent a progression in Stability AI’s commitment to delivering cutting-edge image generation technology:

- Stable Diffusion XL (SDXL): Building upon earlier versions, SDXL introduced a larger UNet backbone and dual text encoders, enhancing the model’s ability to generate high-resolution images with improved detail and fidelity.

- Stable Diffusion 3 Medium (SD3 Medium): This model introduces the Multimodal Diffusion Transformer (MMDiT) architecture, offering improved image quality, typography, and complex prompt understanding. With approximately 2 billion parameters, it is optimized for consumer hardware, balancing performance and accessibility.

- Stable Diffusion 3 Large (SD3 Large): Building upon the MMDiT architecture, SD3 Large scales up to 8 billion parameters, delivering superior image quality and prompt adherence. It is designed for professional use cases requiring high-resolution outputs.

- Stable Image Ultra: As Stability AI’s flagship model, Stable Image Ultra combines the power of SD3 Large with advanced workflows to produce the highest quality photorealistic images. It is tailored for industries demanding exceptional visual fidelity, such as marketing, advertising, and architecture.

- Stable Diffusion 3.5 Large (SD3.5 Large): An evolution of SD3 Large, SD3.5 Large maintains the 8 billion parameter scale while incorporating refinements for enhanced prompt adherence and image quality. It continues to utilize the MMDiT architecture.
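
As a rough sense of scale, the 8 billion parameter figure quoted above can be turned into memory for the weights alone. The sketch below assumes 16-bit weights (2 bytes per parameter), which is an illustrative assumption and not something stated above; text encoders, the VAE, and activations add to the total.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in GiB."""
    return num_params * bytes_per_param / 1024**3


# Parameter counts quoted in the list above.
PARAMS = {"SD3 Large": 8e9, "SD3.5 Large": 8e9}

for name, n in PARAMS.items():
    # Assumption: 16-bit weights; other precisions change the result proportionally.
    print(f"{name}: ~{weight_memory_gib(n):.1f} GiB of weights at 16-bit precision")
```

Under that assumption, each 8 billion parameter model needs roughly 15 GiB for weights before any other component is loaded.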

Although hosting a model yourself offers full control and customization, Amazon Bedrock provides a convenient alternative: fast, reliable image generation on managed AWS infrastructure. Users can integrate image generation into their applications through an API without managing the backend, which makes deployments quicker to set up and easier to scale.

At the time of writing, developers can access SDXL, SD3 Large, and Stable Image Ultra via the Bedrock API for high-quality, photorealistic image creation. SD3 Medium and SD3.5 Large are not yet available on Bedrock, but users can run them on EC2 instances. With tools like ComfyUI, they can customize prompts and output pipelines in an environment built for high-performance image generation.
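
Model availability changes over time and differs by region, so it can help to check it programmatically. The following is a minimal sketch, assuming AWS credentials are configured and your account is allowed to call `list_foundation_models` on the `bedrock` control-plane client. The helper names `stability_model_ids` and `list_stability_models` are illustrative, not part of any library.

```python
def stability_model_ids(model_summaries):
    """Return the sorted model IDs that start with 'stability.'."""
    return sorted(
        m["modelId"]
        for m in model_summaries
        if m.get("modelId", "").startswith("stability.")
    )


def list_stability_models(region_name="us-west-2"):
    """Query Bedrock for the foundation models visible in one region."""
    import boto3  # imported lazily so the filter above works without AWS access

    client = boto3.client("bedrock", region_name=region_name)
    response = client.list_foundation_models()
    return stability_model_ids(response["modelSummaries"])


# Usage (requires AWS credentials and network access):
#   for model_id in list_stability_models():
#       print(model_id)
```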

Calling the Models using Bedrock

To call these models through the Bedrock API, you can set up a simple Python script. This example generates an image with the SD3 Large model. First, initialize the Bedrock runtime client using boto3, then invoke the model with a prompt. The response contains the image encoded in base64, which can be decoded and displayed using Python’s Pillow library.

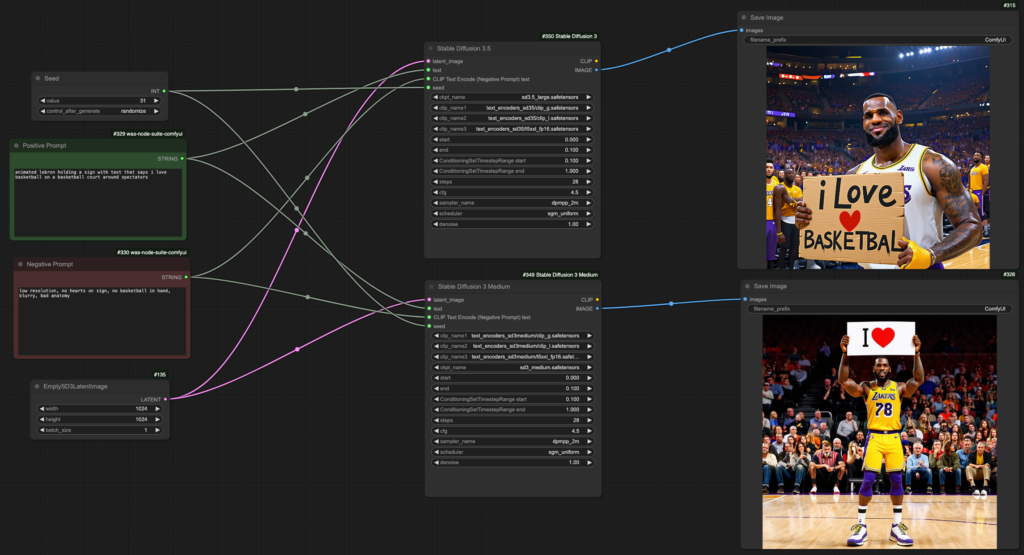
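
The request and response handling described above can be kept separate from the network call, which makes it easier to check offline. This is a minimal sketch, not the definitive integration: `prompt`, `negative_prompt`, `seed`, and `images` are the fields used in this article's script, while the `finish_reasons` check and all helper names are assumptions that should be confirmed against the Bedrock documentation for the model you call.

```python
import base64
import json


def build_request_body(prompt, negative_prompt=None, seed=None):
    """Serialize a text-to-image request as a JSON string."""
    body = {"prompt": prompt}
    if negative_prompt is not None:
        body["negative_prompt"] = negative_prompt
    if seed is not None:
        body["seed"] = seed
    return json.dumps(body)


def decode_first_image(response_body):
    """Return raw image bytes from a parsed response body (a dict)."""
    # Assumption: a non-null entry in "finish_reasons" marks a filtered or failed generation.
    reasons = response_body.get("finish_reasons") or [None]
    if reasons[0] is not None:
        raise RuntimeError(f"Generation did not complete: {reasons[0]}")
    return base64.b64decode(response_body["images"][0])


def generate_image(prompt, model_id="stability.sd3-large-v1:0", region_name="us-west-2"):
    """Invoke the model and return image bytes (needs AWS credentials and model access)."""
    import boto3  # imported here so the helpers above can be exercised offline

    client = boto3.client("bedrock-runtime", region_name=region_name)
    response = client.invoke_model(modelId=model_id, body=build_request_body(prompt))
    return decode_first_image(json.loads(response["body"].read()))
```

Writing the returned bytes to a file instead of opening a viewer can be more practical on a server without a display.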

SD3 Medium and SD3.5 Large Inference with ComfyUI

To run inference with SD3 Medium and SD3.5 Large, we need an EC2 instance with sufficient VRAM. A g6e.4xlarge instance is suitable, as it provides the GPU resources these large models require. After launching the instance, the next step is to install ComfyUI, a flexible, node-based interface for building and running Stable Diffusion workflows.

Once ComfyUI is installed, we download the SD3 Medium and SD3.5 Large model files from Hugging Face, making sure we have everything needed to construct a working ComfyUI workflow. This includes not only the main model files but also any additional components, such as VAE (Variational Autoencoder) models or text encoders, that the workflow needs to load. With the setup complete, we can run custom prompts and explore these models in a controlled, high-performance environment.
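
Once a workflow runs in the ComfyUI interface, it can also be driven from a script. The following is a minimal sketch, assuming the workflow was exported from ComfyUI in its API (JSON) format, that the server listens on its default address `127.0.0.1:8188`, and that the positive prompt lives in a `CLIPTextEncode` node. The file name `workflow_api.json` and the node id `"6"` are hypothetical and depend on your own workflow.

```python
import copy
import json
import urllib.request


def set_prompt_text(workflow, node_id, text):
    """Return a copy of an API-format workflow with one text node's prompt replaced."""
    patched = copy.deepcopy(workflow)
    patched[node_id]["inputs"]["text"] = text
    return patched


def queue_workflow(workflow, server="http://127.0.0.1:8188"):
    """POST the workflow to ComfyUI's /prompt endpoint and return its JSON reply."""
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(
        f"{server}/prompt",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())


# Usage (requires a running ComfyUI server; file name and node id are hypothetical):
#   with open("workflow_api.json") as f:
#       workflow = json.load(f)
#   queue_workflow(set_prompt_text(workflow, "6", "A scenic landscape with mountains and a lake"))
```

Copying the workflow before patching keeps the exported template reusable across prompts.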

Here are some examples of text-to-image generations, along with the prompts used:

The Future of Image Generation

SD3.5 builds on the strengths of its predecessor with better performance and versatility. Its improved speed and prompt adherence make it a valuable asset for businesses and creators who need high-quality visuals under tight deadlines, and its adaptability suits a wide range of industries, from entertainment and marketing to education and e-commerce.

Because the model can also be downloaded and run on your own infrastructure, SD3.5 is a likely favorite for those looking to build on the legacy of SDXL. As demand for fast, efficient, and customizable content creation tools continues to grow, SD3.5 is well placed to play a significant role in the future of image generation.

Python script for the Bedrock example (SD3 Large):

    import base64
    import io
    import json

    import boto3
    from PIL import Image

    # Initialize Bedrock client
    bedrock = boto3.client('bedrock-runtime', region_name='us-west-2')

    # Invoke model with a prompt
    response = bedrock.invoke_model(
        modelId='stability.sd3-large-v1:0',
        body=json.dumps({
            'prompt': 'A scenic landscape with mountains and a lake',
            'negative_prompt': 'blurred',
            'seed': 42
        })
    )

    # Process the output image
    output_body = json.loads(response["body"].read().decode("utf-8"))
    base64_output_image = output_body["images"][0]
    image_data = base64.b64decode(base64_output_image)
    image = Image.open(io.BytesIO(image_data))
    image.show()