Abstract

The rapid evolution of Generative Artificial Intelligence (GenAI) has catalyzed transformative changes across various industries, necessitating the development of robust frameworks that cater to the growing demands of efficiency, security, and feature richness. Langchain has emerged as a prominent platform for developers aiming to construct and deploy GenAI applications, boasting over 93.2 thousand stars on GitHub and more than 20 million downloads. Despite its widespread adoption, the increasing complexity of AI projects underscores the limitations inherent in existing frameworks such as Langchain, particularly concerning speed, simplicity, and security.

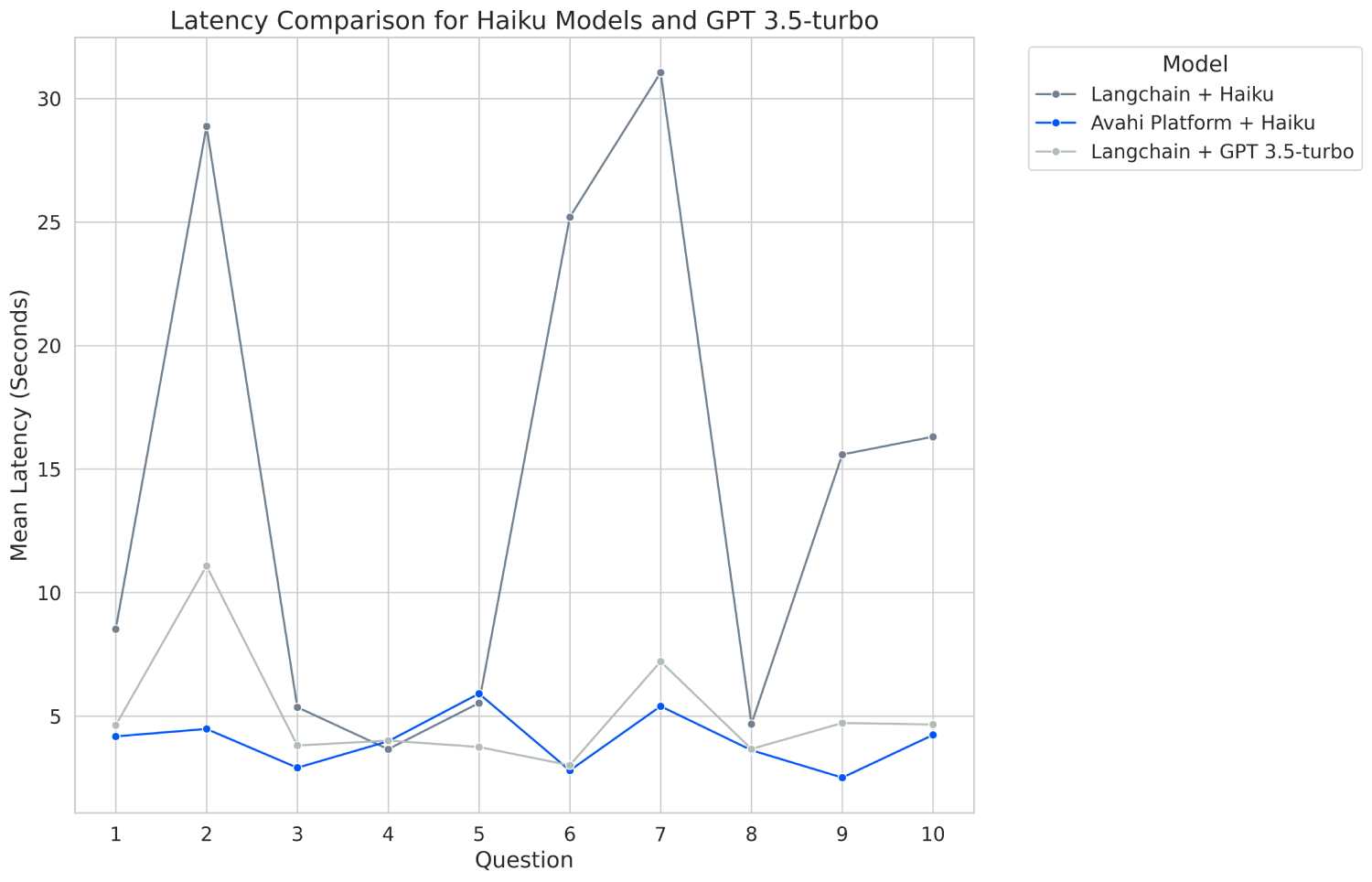

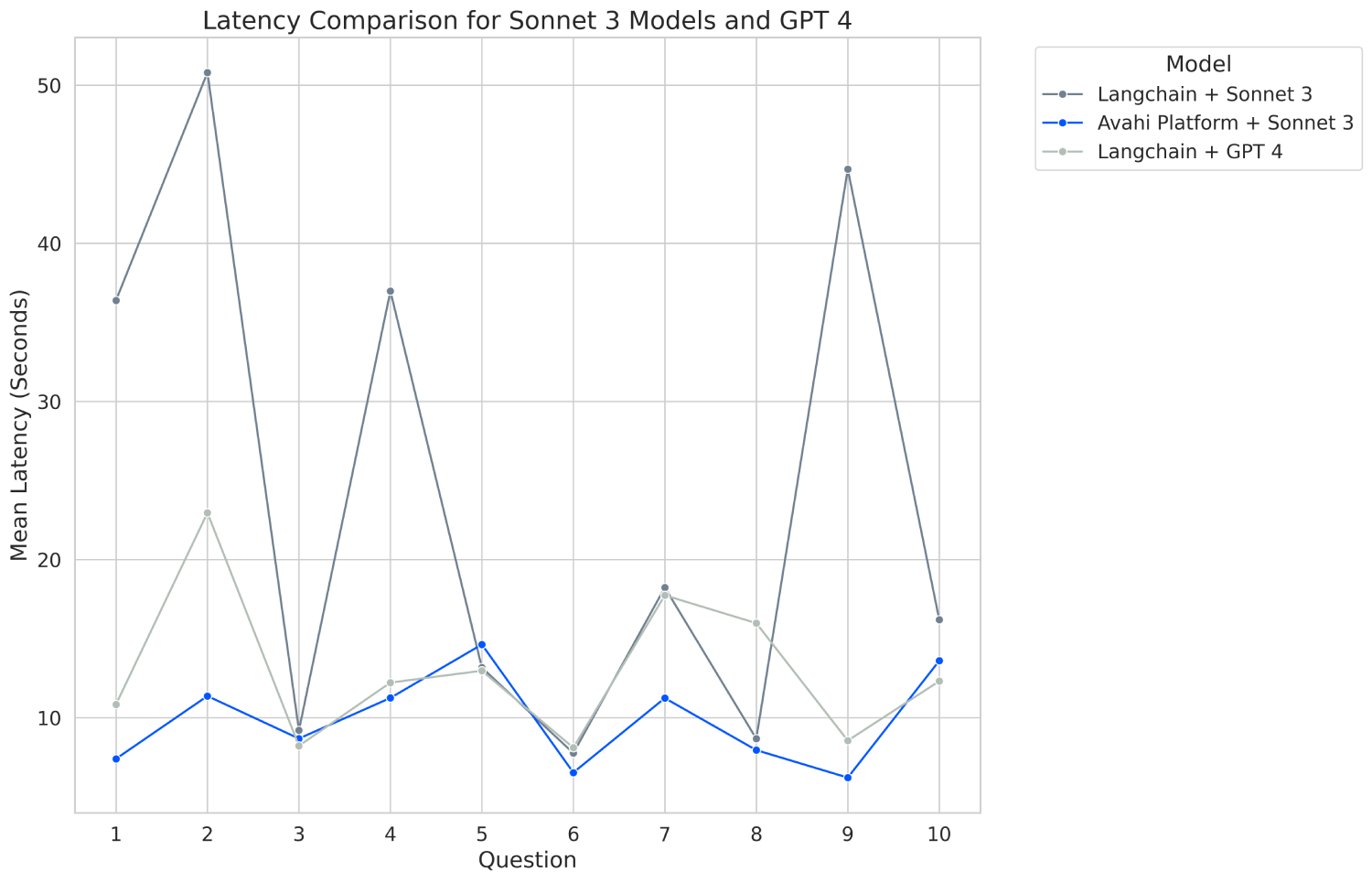

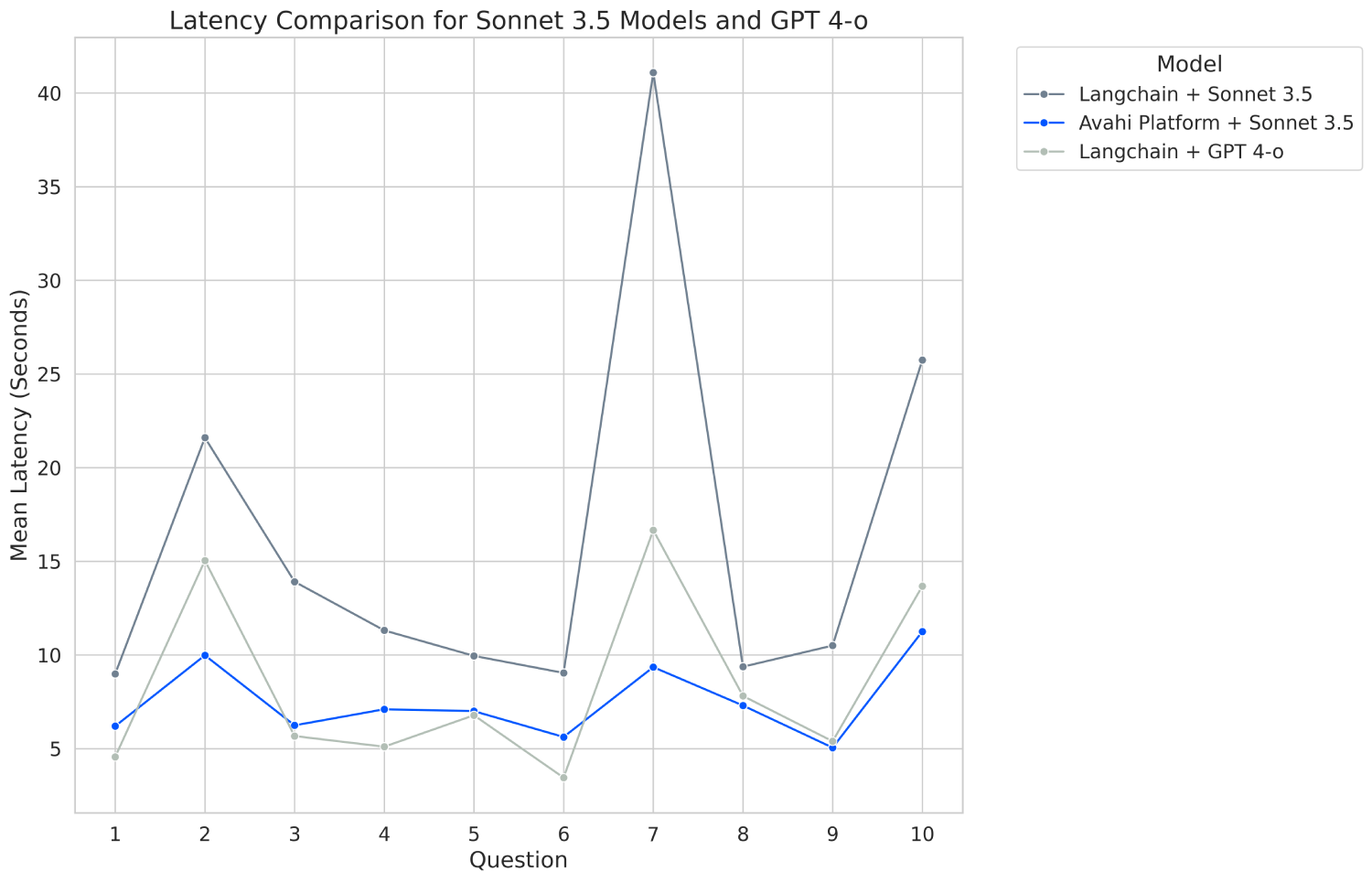

In this blog, we introduce AvahiPlatform’s CSV Query feature. AvahiPlatform is a state-of-the-art Python SDK designed to surpass the capabilities of existing solutions like Langchain. Its CSV querying capability achieves up to a 61% reduction in latency compared to Langchain’s widely used pandas agent. Because it operates entirely within the secure AWS environment, AvahiPlatform also strengthens data security and compliance, addressing critical concerns in AI development.

Our comprehensive evaluation involves rigorous latency testing using Anthropic’s Claude models on Amazon Bedrock (Haiku 3.0, Sonnet 3.0, and Sonnet 3.5) and comparable OpenAI models (GPT 3.5, GPT 4, and GPT 4o) across 10 complex queries, each run 20 times. The results demonstrate AvahiPlatform’s superior performance and streamlined development experience. We conclude that AvahiPlatform CSV Query is a viable solution that outperforms the most common tool for CSV querying.

Introduction

Background

The advent of Generative AI has ushered in a new era of automation and intelligence, with applications ranging from natural language processing to image generation. Frameworks like Langchain have played a pivotal role in democratizing AI development, providing tools that simplify the integration of AI capabilities into applications. Langchain’s prominence is reflected in its substantial GitHub community and widespread usage, and it has become the de facto choice for many developers.

However, as AI applications become more sophisticated, the limitations of existing frameworks become apparent. Developers face challenges related to performance inefficiencies, complex coding requirements, and security vulnerabilities, particularly when handling sensitive data or operating in regulated industries.

Motivation

The motivation behind Avahi Platform stems from a clear need for an advanced framework that addresses these challenges head-on. Avahi Platform seeks to provide a solution that not only matches but exceeds the capabilities of existing tools like Langchain. By focusing on speed, simplicity, and security, Avahi Platform aims to streamline the development process, enhance application performance, and ensure robust data protection.

By operating entirely within the AWS ecosystem and leveraging Amazon Bedrock’s advanced models, Avahi Platform offers an integrated environment that supports the latest AI innovations while adhering to stringent security standards. This alignment with AWS services ensures that data remains within a secure and compliant infrastructure, mitigating risks associated with data breaches and unauthorized access.

Objectives

The primary objective of this study is:

- Performance Evaluation: To quantitatively assess AvahiPlatform’s performance compared to Langchain in terms of latency across various GenAI tasks.

Methodology

Evaluation Criteria

To provide a comprehensive comparison between Avahi Platform and Langchain, we conducted experiments based on the following criteria:

- Latency: The time taken to execute specific GenAI tasks, measured in seconds. This metric evaluates processing efficiency and responsiveness.

- Complex query: A query that involves more than one column of the provided CSV file and requires at least three coding operations, such as grouping by values or applying operations to the CSV rows. The query itself provides little context, so the tool under test (Langchain or AvahiPlatform) must supply enough context to the LLM for it to generate a coherent, accurate response.

- Number of Runs: Each query was run 20 times, and the reported latency is the average of those 20 runs rather than a single measurement, which reduces the influence of run-to-run variability and captures a wider range of scenarios.
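As a minimal sketch of the run-and-average protocol described above, the Python snippet below times a query callable 20 times with `time.perf_counter` and reports the mean. `measure_latency`, `average_latency`, and the `fake_query` stand-in are hypothetical names for illustration; the study's actual test harness may differ.

```python
import statistics
import time
from typing import Callable, List


def measure_latency(run_query: Callable[[str], object], question: str,
                    runs: int = 20) -> List[float]:
    """Call run_query(question) `runs` times; return per-run latencies in seconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()  # monotonic, high-resolution timer
        run_query(question)          # hypothetical: a Langchain agent or AvahiPlatform call
        latencies.append(time.perf_counter() - start)
    return latencies


def average_latency(latencies: List[float]) -> float:
    """Mean latency across runs, the statistic reported per question."""
    return statistics.mean(latencies)


if __name__ == "__main__":
    # Stand-in for a real CSV query: sleeps about 10 ms instead of calling an LLM.
    def fake_query(question: str) -> str:
        time.sleep(0.01)
        return "answer"

    samples = measure_latency(fake_query, "Get the exam score average by gender")
    print(f"{len(samples)} runs, mean {average_latency(samples):.3f} s")
```

Wrapping a Langchain agent and an AvahiPlatform call (both assumed, not shown) in the same kind of callable and passing the same question to each would yield the two per-run samples that a single table cell averages.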

Experimental Setup

Environment Configuration

All experiments were conducted within the AWS ecosystem to ensure consistency and reliability. For both Avahi Platform and Langchain, we utilized Amazon Bedrock’s advanced large language models (LLMs):

- Haiku 3.0

- Sonnet 3.0

- Sonnet 3.5

For comparison with the most popular competitor, OpenAI, Langchain was also run with the following models:

- GPT 3.5

- GPT 4

- GPT 4o

Consistency Measures

To maintain fair comparisons:

- Hardware Specifications: Tests were conducted on equivalent computational resources (Amazon SageMaker) to eliminate hardware-induced variability.

- Data Preparation: The same dataset was used for all tasks, ensuring consistency in input across both platforms.

Evaluated Task

CSV Querying: Interpreting natural language queries to extract information from CSV data.
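To make the task concrete, the sketch below shows the kind of multi-column grouping and aggregation that one of the evaluated questions (“Get the exam score average for all the genders with and without Learning Disabilities”) ultimately requires, written with Python's standard `csv` module. The column names `Gender`, `Learning_Disabilities`, and `Exam_Score`, the helper name, and the sample rows are assumptions for illustration, not the study's dataset; in the evaluated tools, the LLM has to derive this logic from the natural language question plus whatever CSV context the tool provides.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Tiny illustrative CSV; column names and values are assumptions, not the study's data.
SAMPLE_CSV = """Gender,Learning_Disabilities,Exam_Score
Male,Yes,61
Male,No,68
Female,No,70
Female,Yes,64
Male,No,72
Female,No,66
"""


def avg_score_by_gender_and_ld(csv_text: str) -> dict:
    """Group rows by (Gender, Learning_Disabilities) and average Exam_Score."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = (row["Gender"], row["Learning_Disabilities"])  # two grouping columns
        groups[key].append(float(row["Exam_Score"]))          # value column
    # Average each group; sort keys so the output order is deterministic.
    return {key: mean(scores) for key, scores in sorted(groups.items())}


if __name__ == "__main__":
    for (gender, ld), avg in avg_score_by_gender_and_ld(SAMPLE_CSV).items():
        print(f"{gender:6} LD={ld:3} avg={avg:.2f}")
```

In pandas, the same logic is roughly `df.groupby(["Gender", "Learning_Disabilities"])["Exam_Score"].mean()`, which is the kind of code a pandas-based agent would be expected to produce for this question.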

Results

Latency Comparison

Latency was measured by timing the execution of each task from initiation to completion and averaging over 20 runs. The results are presented in Table 1.

Table 1: Latency Comparison (average of 20 runs, in seconds)

| Question | Langchain + Haiku | Avahi Platform + Haiku | Langchain + GPT 3.5-turbo | Langchain + Sonnet 3 | Avahi Platform + Sonnet 3 | Langchain + GPT 4 | Langchain + Sonnet 3.5 | Avahi Platform + Sonnet 3.5 | Langchain + GPT 4o |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 4.68 | 3.62 | 3.67 | 8.67 | 7.95 | 15.98 | 9.37 | 7.3 | 7.81 |
| 2 | 15.59 | 2.51 | 4.72 | 44.69 | 6.21 | 8.55 | 10.5 | 5.05 | 5.39 |
| 3 | 536 | 2.91 | 3.81 | 9.2 | 8.68 | 8.22 | 13.91 | 6.24 | 5.67 |
| 4 | 28.87 | 4.48 | 11.08 | 5.08 | 11.37 | 22.96 | 21.6 | 9.98 | 15.04 |
| 5 | 31.05 | 5.4 | 7.21 | 18.23 | 11.24 | 17.74 | 41.09 | 9.35 | 16.66 |
| 6 | 16.31 | 4.24 | 4.66 | 16.2 | 13.6 | 12.31 | 25.74 | 11.25 | 13.67 |
| 7 | 25.2 | 2.8 | 3.01 | 7.76 | 6.53 | 8.1 | 9.04 | 5.62 | 3.45 |
| 8 | 8.52 | 4.18 | 4.63 | 36.39 | 7.4 | 10.84 | 8.99 | 6.2 | 4.56 |
| 9 | 5.53 | 5.91 | 3.75 | 13.17 | 14.63 | 12.97 | 9.95 | 7 | 6.78 |
| 10 | 3.66 | 3.98 | 4.01 | 36.98 | 11.25 | 12.22 | 11.31 | 71 | 5.1 |
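The document does not state how the headline latency-reduction figure was aggregated. As one possible definition, the sketch below computes the fractional reduction 1 - (AvahiPlatform latency / Langchain latency), both per question and on the mean across questions, using the Sonnet 3 columns of the latency table. The choice of column pair and of the mean as the aggregate are assumptions; any other column pair can be substituted.

```python
from statistics import mean

# Per-question average latencies (s), copied from the Sonnet 3 columns of the latency table.
LANGCHAIN_SONNET3 = [8.67, 44.69, 9.2, 5.08, 18.23, 16.2, 7.76, 36.39, 13.17, 36.98]
AVAHI_SONNET3 = [7.95, 6.21, 8.68, 11.37, 11.24, 13.6, 6.53, 7.4, 14.63, 11.25]


def reduction(baseline: float, candidate: float) -> float:
    """Fractional latency reduction of `candidate` relative to `baseline` (negative if slower)."""
    return 1.0 - candidate / baseline


# Reduction for each question, and reduction of the mean latency across all questions.
per_question = [reduction(b, c) for b, c in zip(LANGCHAIN_SONNET3, AVAHI_SONNET3)]
overall = reduction(mean(LANGCHAIN_SONNET3), mean(AVAHI_SONNET3))

# 1-based question number with the largest reduction, and count of questions where it is faster.
best_q = max(range(len(per_question)), key=per_question.__getitem__) + 1
faster = sum(r > 0 for r in per_question)

print(f"reduction of mean latency: {overall:.1%}")
print(f"largest per-question reduction: Q{best_q} ({per_question[best_q - 1]:.1%})")
print(f"questions where AvahiPlatform is faster: {faster}/{len(per_question)}")
```

Reporting the per-question range next to the aggregate makes it clear whether an "up to" figure refers to a single question or to the average over all questions.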

Reliability

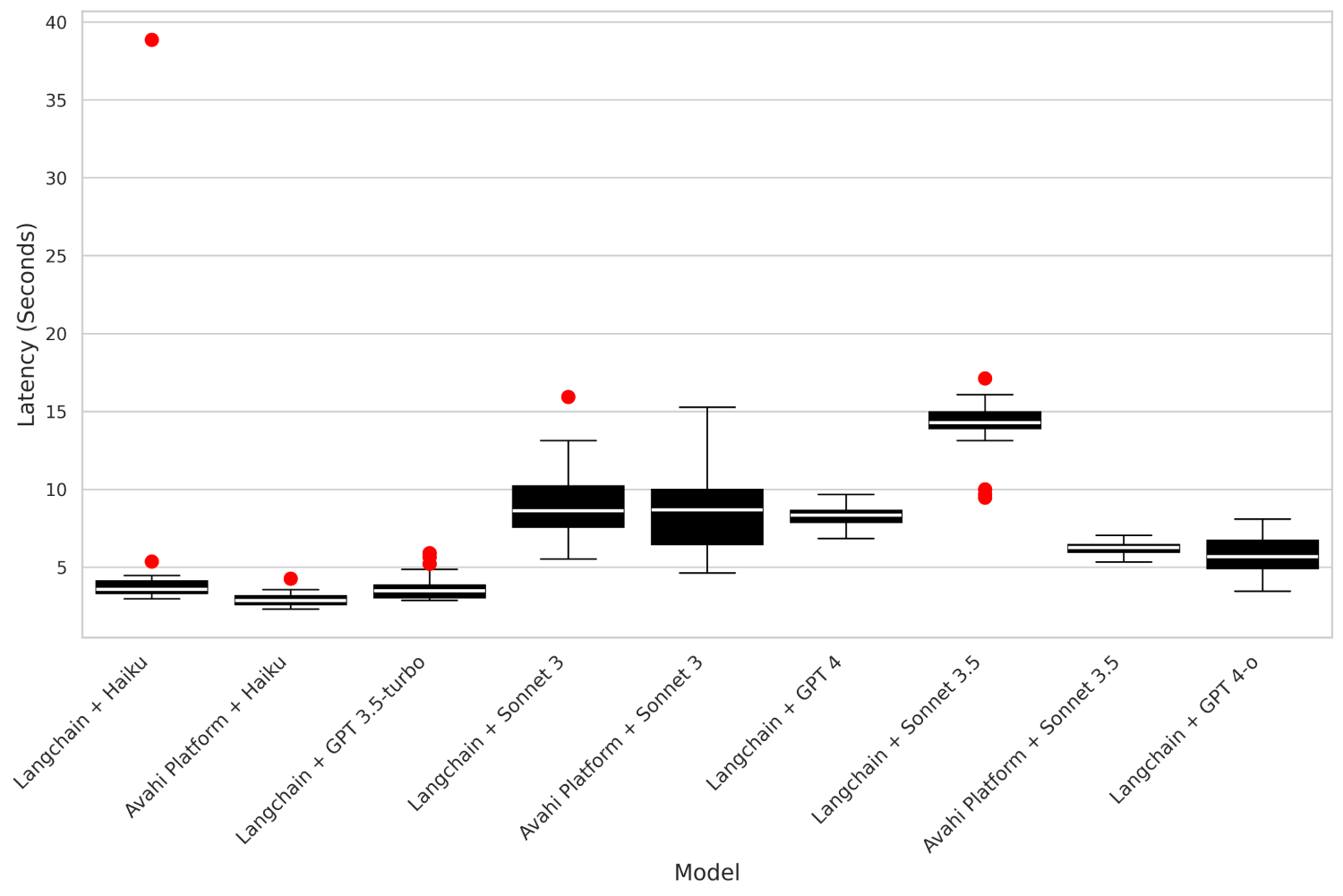

Latency across 20 experiments for the question “Get the exam score average for all the genders with and without Learning Disabilities”

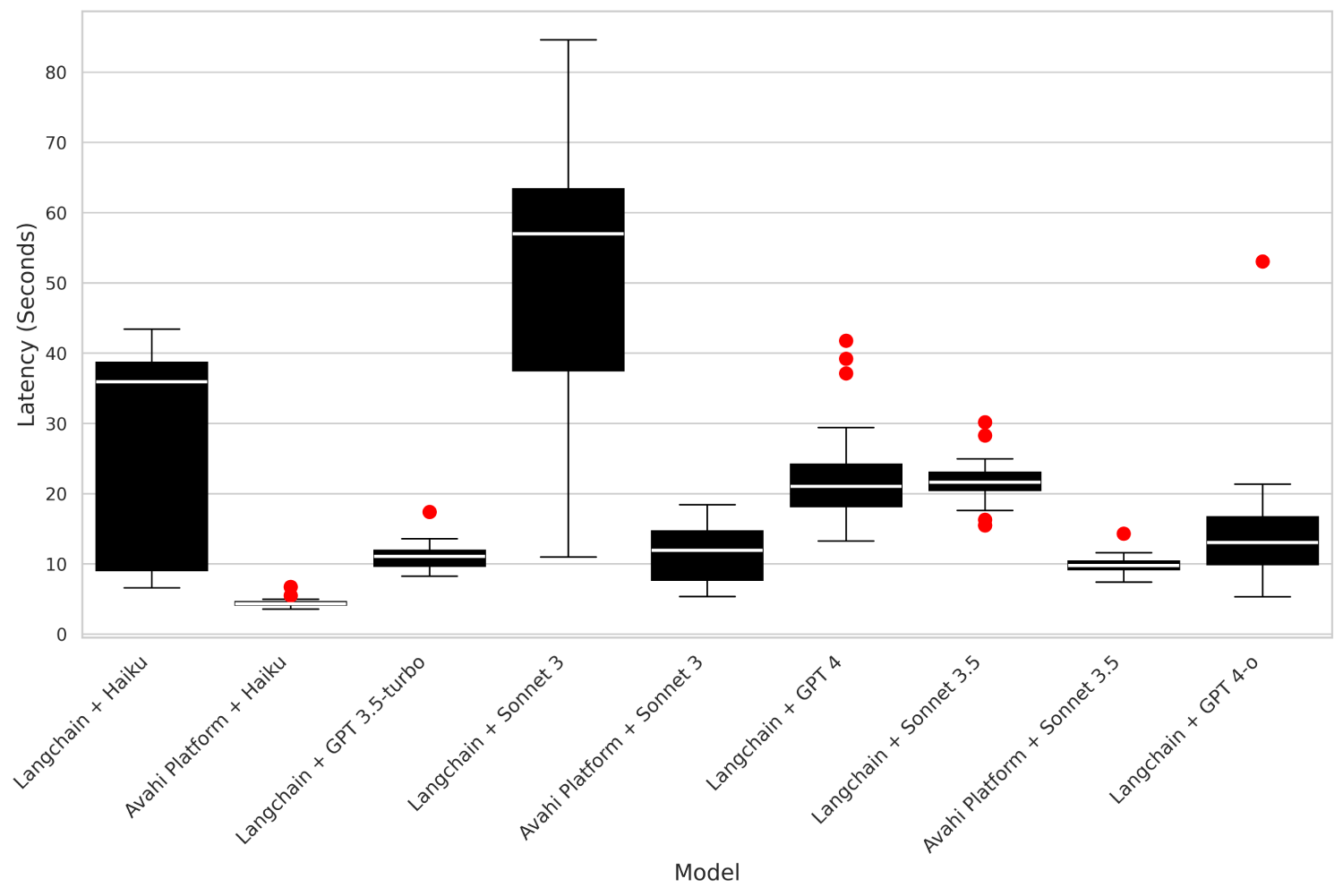

Latency across 20 experiments for the question “How does peer influence correlate to Parental Education Level for students attending Public schools?”

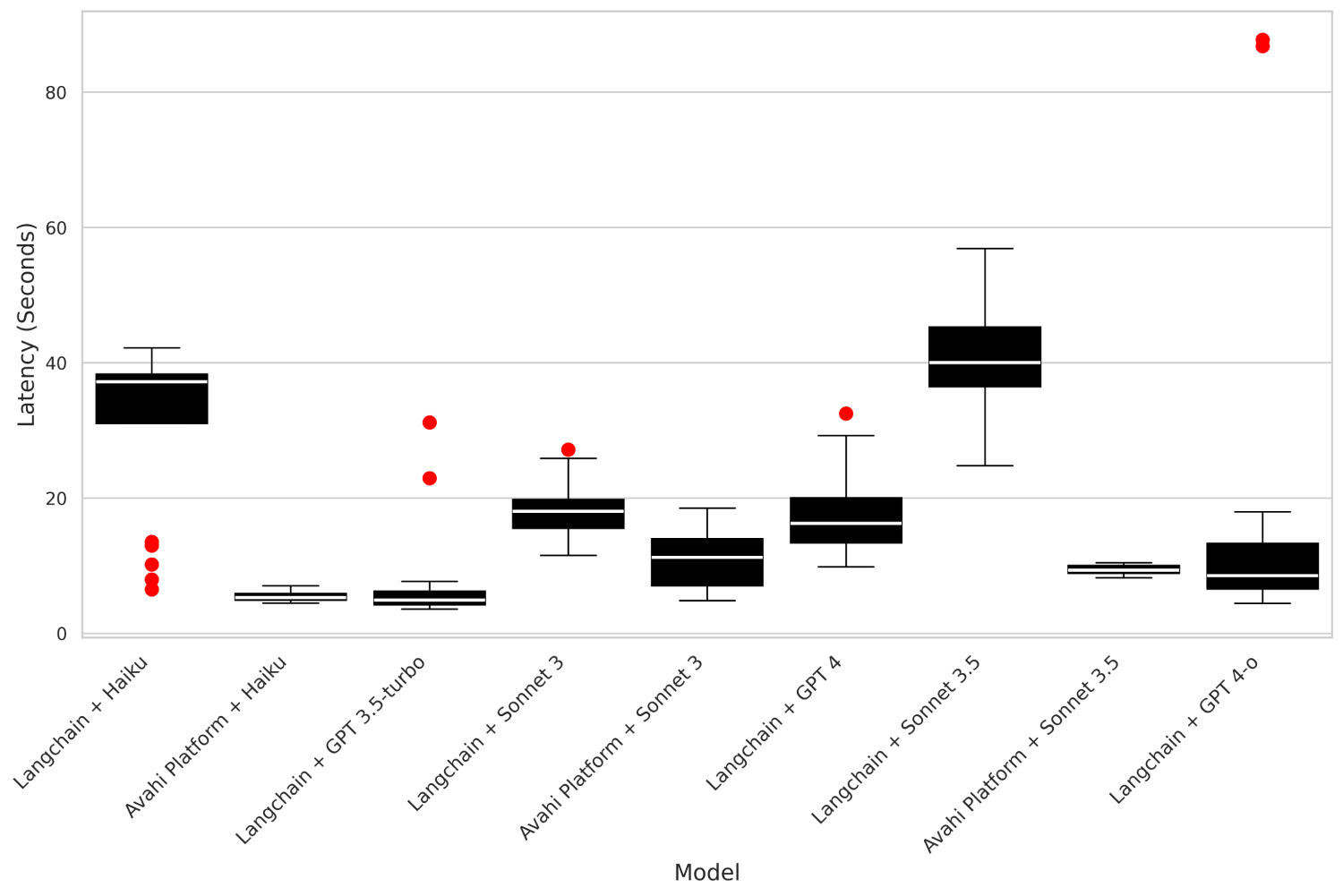

Latency across 20 experiments for the question “Who have a bigger average on Physical Activity, students with internet access AND more than 2 Tutoring sessions OR students with no internet access AND 2 or less Tutoring sessions?”

- Lower Latency: AvahiPlatform consistently shows a lower median latency in the CSV Query task, meaning it typically completes faster. This makes it a better option for real-time or performance-critical applications.

- Tighter Distribution: AvahiPlatform’s latencies are more tightly clustered across runs, indicating more consistent response times, which is essential for applications that require stable execution times.

- Fewer Outliers: Avahi Platform also produces fewer latency outliers, indicating that it is less prone to unexpected performance slowdowns. This ensures users can trust the platform to provide steady results under various conditions.
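Median, spread, and outliers are the quantities a box plot summarizes. Assuming the figures are box plots drawn with the common 1.5 × IQR whisker convention (the plotting settings are not stated here), the sketch below computes them for one latency sample with Python's `statistics` module. `summarize_latencies` is a hypothetical helper, and the sample values are illustrative, not measurements from this study.

```python
from statistics import median, quantiles
from typing import Dict, List


def summarize_latencies(latencies: List[float], whisker: float = 1.5) -> Dict[str, object]:
    """Median, interquartile range, and Tukey-style outliers for one latency sample."""
    # "inclusive" interpolation matches the common linear-percentile convention.
    q1, _, q3 = quantiles(latencies, n=4, method="inclusive")
    iqr = q3 - q1                                       # spread of the middle 50% of runs
    low, high = q1 - whisker * iqr, q3 + whisker * iqr  # whisker fences
    return {
        "median": median(latencies),
        "iqr": iqr,
        "outliers": sorted(x for x in latencies if x < low or x > high),
    }


if __name__ == "__main__":
    # Illustrative values only, not measurements from this study.
    sample = [3.1, 3.3, 3.0, 3.4, 3.2, 3.5, 3.1, 3.3, 3.2, 9.8]
    print(summarize_latencies(sample))
```

Comparing `median` and `iqr` between the AvahiPlatform and Langchain samples for the same question corresponds to the “Lower Latency” and “Tighter Distribution” points, and the length of `outliers` corresponds to “Fewer Outliers”.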

Security and Network Advantages

Operating entirely within the AWS environment, AvahiPlatform leverages AWS’s comprehensive security measures, offering the following advantages:

- Data Integrity: All operations are confined within AWS, ensuring that data is not exposed to external servers or used for unintended purposes.

- Compliance: AvahiPlatform adheres to industry standards and compliance regulations (e.g., GDPR, HIPAA), benefiting from AWS’s certifications and compliance programs.

- Reduced Attack Surface: By minimizing external data movement and relying on AWS’s secure infrastructure, the risk of data breaches and unauthorized access is significantly reduced.

In contrast, Langchain may involve interactions with external APIs or services, potentially introducing vulnerabilities or compliance challenges.

Discussion

Performance and Efficiency

The latency improvements observed in AvahiPlatform can be attributed to its optimized integration with AWS services and the efficient handling of model invocations. By reducing overhead and streamlining operations, AvahiPlatform delivers faster responses, which is crucial for real-time applications and enhances user experience.

Security Enhancements

Data security is paramount in today’s interconnected world. Avahi Platform’s exclusive operation within the AWS environment ensures that data remains protected under AWS’s robust security protocols. Sensitive information is handled with care, benefiting from encryption, secure authentication mechanisms, and compliance with international standards.

By minimizing data exposure and eliminating the need to transmit data to external services, Avahi Platform reduces the risk of data breaches. This is particularly important for industries dealing with confidential information, such as healthcare and finance.

Developer Experience

Avahi Platform’s emphasis on simplicity and ease of use lowers the barrier to entry for AI application development. Developers can quickly prototype and deploy applications without deep expertise in AI or extensive coding. The provision of default configurations and integration with familiar tools further enhances the developer experience.

Conclusion

Avahi Platform represents a significant advancement in GenAI frameworks, addressing key limitations of existing solutions like Langchain. Through rigorous testing and evaluation, we have demonstrated that AvahiPlatform offers:

- Improved Performance: Achieves up to a 61% reduction in latency for CSV querying compared to Langchain’s pandas agent, enhancing application responsiveness.

AvahiPlatform’s alignment with AWS services and utilization of Amazon Bedrock’s advanced models ensure that developers have access to cutting-edge AI capabilities within a secure and efficient framework. By simplifying the development process and enhancing performance, Avahi Platform empowers developers to focus on innovation and accelerate the deployment of AI solutions.

As AI continues to permeate various aspects of technology and industry, frameworks like Avahi Platform will play an essential role in facilitating this growth. Future work may involve further optimization, expanding feature sets, and exploring integrations with other cloud services to continue enhancing AI application development.

References

Langchain GitHub Repository. GitHub – langchain-ai/langchain: Build context-aware reasoning applications.

Langchain AWS GitHub Repository. GitHub – langchain-ai/langchain-aws: Build LangChain Applications on AWS.

Amazon Bedrock Documentation. Build Generative AI Applications with Foundation Models – Amazon Bedrock – AWS.

AvahiPlatform GitHub Repository. GitHub – avahi-org/avahiplatform: With AvahiPlatform, you can create and deploy GenAI applications on Bedrock in just 60 seconds. It’s that fast and easy!

Latency and lines of code derived from: https://github.com/avahi-org/avahiplatform/blob/main/Test/latency_test/CSV_langchain_tests.ipynb